Welcome pixel hustler! If you are new to this site and seeking digital colour comprehension, you might as well start at Question #1…

- Question #32: Does the F*cking “Colour” of the Light Change When We Increase or Decrease the Quantity of Light?
  As we look back to the past two questions, it is hopefully more evident that no simple path as forwarded…

- Question #31: What the F*ck is Colour?
  There’s a lurking question sitting atop the pile of these posts, which hopefully has been emerging up and out of…

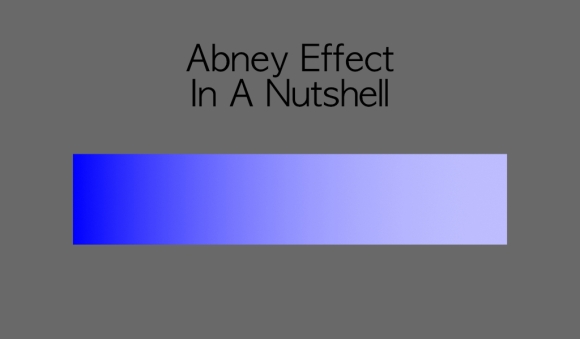

- Question #30: What is the F*cking “Colour” of Light?
  Hello. I just wanted to let you know that the dimwitted author set their password to “password”. This left a…

- Questions to the Editor #1: What the F*ck is a Picture?
  I often receive quite a few questions asking me about my particular spin on colourimetry, pictures, and vision, from folks…

- Question #29: What the F*ck is Intensity, Again?
  Following on from Question #28’s Rashomon inspired retelling, we are going to look again at what Question #2 brought to…

- Question #28: What the F*ck is the Difference Between Affect and Effect? (And What the F*ck is Rashomon?)
  Rashomon was a film that offered a unique multiple recounting of a horrible incident. Why am I bringing it up…

- Question #27: What the F*ck is Perceptual Colour?
  Remember how I promised years ago that this series would supply the typical digital image maker with fundamental concepts to…

- Question #26: What the F*ck is Stimulus Colour?
  Phew… it’s been a while dear friend. And with that repose away from the insanity of digital colour, it seems…

- Question #25: What the F*ck is Alpha?
  Question #25: What is alpha? Answer #25: Yet another dumb f*cking Greek glyph label that a math nerd arbitrarily decided…

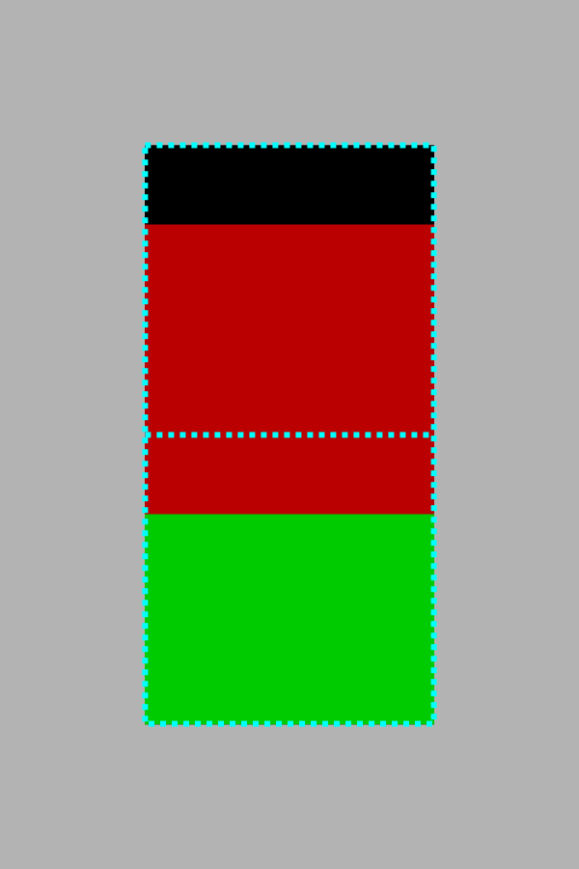

- Question #24: How the F*ck Do We Move a Red Pixel Down and Block Another Pixel’s Emission?
  Question #24: How can we move the red pixel from Question #23 down and block the green pixel? To answer…

- Question #23: How the F*ck Do We Move a Red Pixel Down Over Another Colour?
  We’ve been talking about “pixels” in terms of little squares that can emit light. I’d like to point out that…

- Question #22: How the F*ck do we Move a Red Pixel?
  In the last post, we took a very high level peek at what might happen in a code value to…

- Question #21: How the F*ck do we Put a Red Pixel on the Screen?
  If you’ve been following this series of questions, you are probably genuinely wondering why the hell we have taken an…

- Question #20: What the F*ck is a Quantity of Light?
  We’ve been talking about radiometric light ratios here for the most part, specifically regarding the final output from a display.…

- Question #19: What the F*ck is a “Gamut”?
  Phew. It’s been a long time coming, but the time we have invested is starting to pay off! We can…

- Question #18: What the F*ck is the Missing Axis in a Chromaticity Diagram?
  We’ve touched on the importance of the CIE’s chromaticity model, and its relationship to visible light spectra as the single…

- Question #17: What the F*ck is a Colour Space?
  Sixteen questions! It has taken us sixteen questions to even begin to describe the term “Colour Space” with any degree…

- Question #16: What the F*ck is the Triangle Thingy in the Chromaticity Diagram?
  Recall back to Question #13 where we explored how chromaticities are discussed. You may have wondered where that triangle went,…

- Question #15: What the F*ck is “Perceptually Uniform”?
  We have laid some tremendously important groundwork in the most recent questions. Within that, even if you have seen or…

- Question #14: What the F*ck is “White”?
  Phew. It’s been quite a few questions to be able to finally, just about, almost, kinda, sorta discuss what is…

- Question #13: What the F*ck is a Chromaticity?
  Another big word for someone who likely only wants to focus on painting on their iPad Pro. The bad news…

- Question #12: How the F*ck Can We Talk About Light “Intensity”?
  Brighter? Lighter? More light? Less light? Where the hell does physics and energy fit into all of this? Arrgh. If…

- Question #11: How the F*ck Can We Talk About “Colour”?
  Given we’ve hit a milestone in that we are approaching the teens in our question count, the time has come…

- Question #10: Why the F*ck Do We Bother With Linear and Nonlinear Encodings At All?
  Hard to believe we’ve hit Question #10. Hopefully you are all finding the series useful. Nothing is more valuable than…

- Question #9: Why the F*ck is Linear Light Important for a Digital Artist?
  In our last question, we took a quick dive into discussing what a particular type of linear, display linear, is…

- Question #8: What the F*ck Type of “Linear” Have We Been Assuming?
  I’m going to spoil our trend of not answering questions with an actual answer, that is somewhat not-too-secretly buried it…

- Question #7: What the F*ck Does “Linear” Mean?
  This is perhaps one of the most ugly questions we are going to tackle, and I’ll warn you up front,…

- Question #6: What the F*ck is a Transfer Function?
  Whoa. There’s a term that you probably haven’t bumped into before, eh? You’ve been pushing pixels for how long, and…

- Question #5: What the F*ck does 0.5 mean?
  Continuing along the analogy of mixing paints, the colours of the paints matter. If we ask two people to pick…

- Question #4: What the F*ck are the Colours of the Three Lights?
  Have you ever taken a random set of paints and started mixing them? If you have, you probably have realized…

- Question #3: Does the F*cking “Colour” of the Light Change When We Increase or Decrease the Quantity of Light?
  With the first couple of questions out of the way, we have a concrete, albeit simplified, understanding as to what…

- Question #2: What the F*ck Does 0.6 Mean?
  As we learned through our entry question #1, changing the value of an RGB slider changes the intensity of light…

- Question #1: What the F*ck Happens When We Change an RGB Slider Value?
  For reasons that are still somewhat alien and strange to me, I get weekly emails reaching out from digital image…

15 replies on “The Hitchhiker's Guide to Digital Colour”

Hello there!

This content is truly wonderful.

I had some muddy understanding of how a color is handled digitally. Finding a proper, non-bullsh*t explanation is quite a quest.

Thanks for the hard work, I hope to read more of it soon.

PS: Reading the last couple of posts, I had a cold sweat running down my spine, thinking about how a P3 display would “stretch” sRGB code values instead of using an intermediary transfer function when decoding my favorite cat pictures… Is that what is happening??? Are we doomed?!?!?

Welcome Charlie, and thanks for the kind words.

Regarding your horror, you have about nailed it. The good news is that one operating system is properly colour managed. The bad news is that the others are not.

On one operating system, almost all items are managed. On the others, it varies from software to software. That means you can indeed frequently expect the sRGB values to simply be blasted out “as is”, or otherwise handled wrongly.

And remember, transfer functions only control the intensity of light. They can’t change the chromaticities of the lights, which requires a different form of transform. In fact, Apple’s Display P3 colour space uses the exact same transfer function as sRGB! So transfer functions alone can’t fix things!
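To make the point concrete, here is a minimal Python sketch of what proper management does for an sRGB pixel headed to a Display P3 display, under stated assumptions: decode with the shared sRGB transfer function, convert the linear values through CIE XYZ with a 3×3 matrix built from each space's published primaries and D65 white point, then re-encode. The function names are illustrative only and are not from any colour management library. Because the transfer function is identical on both ends, all of the difference comes from the matrix step.

```python
# Minimal sketch: re-expressing sRGB code values for a Display P3 display.
# Function names are illustrative only; this is not a library API.

SRGB_PRIMARIES = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
P3_PRIMARIES = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]
D65_WHITE = (0.3127, 0.3290)  # both spaces share the D65 white point


def xy_to_xyz(x, y):
    """CIE xy chromaticity -> XYZ with luminance Y normalized to 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def invert3(m):
    """Invert a 3x3 matrix via its adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[adj[r][col] / det for col in range(3)] for r in range(3)]


def rgb_to_xyz_matrix(primaries, white):
    """Linear RGB -> XYZ matrix that maps RGB (1, 1, 1) onto the white point."""
    cols = [xy_to_xyz(x, y) for x, y in primaries]
    m = [[cols[j][i] for j in range(3)] for i in range(3)]  # primaries as columns
    scale = mat_vec(invert3(m), xy_to_xyz(*white))
    return [[m[i][j] * scale[j] for j in range(3)] for i in range(3)]


def srgb_decode(v):
    """sRGB transfer function, code value -> linear (also used by Display P3)."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def srgb_encode(v):
    """Inverse of srgb_decode: linear -> code value."""
    return 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055


# sRGB linear -> XYZ -> Display P3 linear, folded into one matrix.
SRGB_TO_P3 = mat_mul(
    invert3(rgb_to_xyz_matrix(P3_PRIMARIES, D65_WHITE)),
    rgb_to_xyz_matrix(SRGB_PRIMARIES, D65_WHITE),
)


def srgb_to_display_p3(rgb):
    linear = [srgb_decode(v) for v in rgb]   # same transfer function on both sides
    p3_linear = mat_vec(SRGB_TO_P3, linear)  # the step a transfer function cannot do
    return [srgb_encode(v) for v in p3_linear]


print(srgb_to_display_p3([1.0, 0.0, 0.0]))  # roughly 0.92, 0.20, 0.14
```

Sending sRGB `[1.0, 0.0, 0.0]` to the P3 display “as is” would light up the purer P3 red primary instead; the managed result keeps the sRGB red chromaticity by mixing in some green and blue.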

It’s great to see folks with enough foundational concepts to arrive at their own inferences. That’s amazing!

Hello, I just wanted to say that I appreciate your hard work creating Filmic, answering questions on StackExchange and other sites and summing up the knowledge here. You are a hero! 🙂 I have learned a lot using those resources.

Appreciate the thought. The hard work, though, comes from a collective raising of understanding. Hopefully this is happening.

For a non-native speaker, that’s a nightmare to read.

I’d love to have a translation to make it easier! Sadly, I can barely speak my own language…

I mean, there are a lot of expressions I’m not used to, and I have trouble distinguishing what is really informative. Would it be possible to summarize the main points?

It’s barely a three-minute read, of which a summary of a summary seems to yield little. There are no assurances of communication across language barriers, sadly.

Hello! Every time I come back to check info and reread chapters I am instantly reminded of how lucky we are for having this. Thank you so much Troy.

For the past few months I have been reading other books and websites regarding this topic, and I believe (I truly hope so) that some of the core concepts are starting to latch on. I have tried my best to follow along the different topics with Houdini and Nuke open to feel it in my own pixels and practically understand how theory is applied. But it is here where I have been stuck for some time: applying all the theory in a real workflow, in Nuke for example. I have been looking into Foundry’s own tutorials on Color Management, but they don’t go into much depth.

For this reason, I was wondering if you knew of any books, sites, or videos you could recommend that cover how to apply the knowledge here to a software workflow. I have been finding it quite hard and labyrinthine to take the step from comprehending the core concepts, understanding and housekeeping OCIO configs, and knowing that I am possibly missing something, to actually using them to my advantage in real scenes. Without examples of real cases, it is quite complicated to know when I am messing up or when I am hitting the note.

Thanks again. Can’t wait for the next chapter of hg2dc!

Absolutely flattered that you have found any of this useful.

As you have probably discovered, the amount of reliable information of sufficient utility out in the wild is horrifically buried under layers of nonsense, numbers, and oftentimes blind appeals to authority. Couple that with the fact that every single curious mind comes at the subject from a different vantage, and can stumble or get hung up in unique ways. This makes it incredibly challenging to suggest a single book or resource.

Worse, software is largely a mess. Plenty of things are brain-wormed with some of the protocols peddled by the massive studios and corporations, or with algorithms that are totally busted-up rubbish. None of it works. Most of it is nonsense.

The main thing one can do to insulate oneself from the miasma of confusion is to try to build up a nuts-and-bolts understanding. I’d be so bold as to say that drawing a distinction between a “stimulus” and a “colour” is an absolutely gargantuan step toward sniffing out garbage. If we firmly locate the creation, perception, and understanding of colour as something generated in the human perceptual system, it allows us to immediately second-guess nonsense that juggles numbers around, or treats electromagnetic radiation as colour.

To that end, I can think of perhaps no better singular tome to at least get one thinking in the right direction than Ralph Evans’ “The Perception of Color”. While it can be incredibly expensive to purchase a hard copy, there are rumours of a PDF lurking out in the interwebs.

I would be absolutely remiss not to also link you to Dr. David Briggs’ site http://www.huevaluechroma.com. Not only does Briggs cover things from a very observer-image-author vantage, his work covers very contemporary explorations of many often overlooked concepts. It also helps to expose readers to the various models, as well as practical examples that showcase how incredible all of this is.

Those are probably two very good entry points to round out understanding, and from there, it can be easier to lift one out of the quagmire of number fscking.

Remember… literally no one on the planet understands how our vision works! Also remember that a majority of the research up to around 1985, oftentimes funded by Kodak, was done using tools that are eclipsed several thousand times over by the device you are reading this on. Their wisdom came from experience and insightful thought. Keep that in mind when you feel you are getting lost in the catacombs of vision, image formation, and numbers!

Hello, love the posts. As a spectral rendering enthusiast, I’m curious if you ever plan to talk about spectral renderers at all, even as an aside, since you mentioned RGB renderers and some of their downsides. Anyways, I look forward to the next post.

Thanks for the kind words.

Spectral rendering is a fascinating topic, but the more important one has nothing to do with RGB or spectral rendering. These constructs try to emulate “light transport” to varying degrees, including the somewhat ridiculous idea that RGB can do so at all, given that it is firmly entrenched in photometry.

The much more pressing discussion I hope to stoke the imagination on is the *output* of these models. No, not the completely underwhelming radiometric or photometric datasets they generate via rendering, but rather how we create pictures using that data as but one ingredient.

The last couple of posts have been slowly inching in that direction, as hopefully folks can see. In traversing this landscape, we also are forced to reconcile some incredibly woeful misinformation or seductive underlying belief structures.

I believe very strongly that a focus on *what a picture is* can help to guide us in our understanding of human cognition, of which visual cognition is but one artificially demarcated facet.

Keep asking the questions. We need more questions.

Hi! I absolutely love this resource, I’ve found it incredibly useful to get a non-BS understanding of colour.

One question I have is that some Rec. 709 images (such as the ones in your Colourimetric Test Imagery repo on GitHub) have negative values. What’s up with that? How should negative values be handled by tonemappers/display transforms?

Thanks so much for the comment.

Let’s see…

Think of colourimetry as an encoding system. We can encode a “coordinate” using negative values.

Relative to the encoding system, such as the purity available to the BT.709 medium, the negative values are bogus nonsense and mean nothing within that system; it is impossible to express a purity “more pure” than the maximally pure value, or “more emissive” than the maximal emission. Relative to a Standard Observer encoding model however, the coordinate can be “meaningful”.
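A minimal Python sketch of this “encoding” point, assuming the commonly published four-decimal matrices between CIE XYZ and linear BT.709/sRGB (D65). The Display P3 green primary (x = 0.265, y = 0.690) is used purely as an example of a chromaticity outside the BT.709 triangle: encoding it yields negative red and blue components, which mean nothing as BT.709 emissions, yet decoding those same numbers back through the Standard Observer (XYZ) matrix recovers the original coordinate.

```python
# Sketch: a chromaticity outside the BT.709 triangle gets negative BT.709 RGB,
# yet still round-trips through CIE XYZ. Matrices are the commonly published
# 4-decimal sRGB/BT.709 (D65) values, so the round trip is only approximate.

XYZ_TO_BT709 = [
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
]
BT709_TO_XYZ = [
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
]


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def xy_to_xyz(x, y, y_lum=1.0):
    """CIE xy chromaticity plus luminance Y -> CIE XYZ."""
    return [y_lum * x / y, y_lum, y_lum * (1.0 - x - y) / y]


# Example stimulus: the Display P3 green primary, outside the BT.709 triangle.
stimulus_xyz = xy_to_xyz(0.265, 0.690)
bt709_rgb = mat_vec(XYZ_TO_BT709, stimulus_xyz)
round_trip_xyz = mat_vec(BT709_TO_XYZ, bt709_rgb)

print([round(c, 3) for c in bt709_rgb])       # red and blue come out negative
print([round(c, 3) for c in round_trip_xyz])  # back to roughly the original XYZ
```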

> How should negative values be handled by tonemappers/display transforms?

It’s a great question and the answer is *no one has a shred of a clue*. The reasons are many. To name a few:

* No one understands how pictures work.

* Negative values indicate stimulus that is “purer” than other values, and how to handle that without breaking neurophysiological mechanics is incredibly complex. We really are in an infancy of understanding. Maybe pre-infancy.

A “clip” of a negative lobe, because it represents *negative stimulus*, ends up *artificially increasing the stimulus* and “deforming” the “intention”. But none of that really matters too much until we get minds thinking about what pictures are, how they work, and what mechanics are being leaned into.
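The numerical side of the clipping claim can be checked directly. A minimal Python sketch, again assuming the commonly published four-decimal BT.709 (D65) matrix values and using the Display P3 green primary purely as an example stimulus: clipping the negative components to zero and measuring CIE luminance Y shows the clipped triplet carrying more luminance than the original. This only illustrates the arithmetic side effect; it says nothing about how such values *should* be handled, which, as above, remains an open question.

```python
# Sketch: clipping negative BT.709 components increases CIE luminance Y.
# Matrix values are the commonly published 4-decimal sRGB/BT.709 (D65) ones.

XYZ_TO_BT709 = [
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
]
BT709_Y_WEIGHTS = [0.2126, 0.7152, 0.0722]  # Y row of the BT.709 -> XYZ matrix


def xy_to_xyz(x, y, y_lum=1.0):
    """CIE xy chromaticity plus luminance Y -> CIE XYZ."""
    return [y_lum * x / y, y_lum, y_lum * (1.0 - x - y) / y]


def luminance(rgb):
    """CIE Y of a linear BT.709 triplet."""
    return sum(w * c for w, c in zip(BT709_Y_WEIGHTS, rgb))


# Example out-of-gamut stimulus: the Display P3 green primary at Y = 1.0.
stimulus = xy_to_xyz(0.265, 0.690)
rgb = [sum(XYZ_TO_BT709[i][j] * stimulus[j] for j in range(3)) for i in range(3)]

# "Clip" the negative lobes to zero. Only green survives, so the result also
# collapses onto the chromaticity of the BT.709 green primary.
clipped = [max(c, 0.0) for c in rgb]

print(round(luminance(rgb), 3))      # about 1.0, the original stimulus
print(round(luminance(clipped), 3))  # noticeably larger than 1.0
```

Removing a negative lobe is the same as adding stimulus, which is why the clipped luminance rises rather than staying put.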

The vast majority of the brain-wormed discussion around colourimetry is so utterly ill framed that it amounts to noodleberries humping footballs to collect a bag. This includes books written on the subject of “gamut mapping”, because the basis is so utterly disconnected from neurophysiological mechanics as to be nothing more than incantations of magic trying to control the ether.

For anyone reading this: skip all the rubbish around colourimetry, and focus on minds like Eric Schwartz and Stephen Grossberg. That’s where the real work rests before us, and we haven’t even begun to scratch the surface with respect to pictures and their formation.

baller
